Which of the Following Is Not a Described IDPS Control Strategy?

The following is an excerpt from the book Introduction to Information Security: A Strategic-Based Approach written by authors Timothy J. Shimeall, Ph.D. and Jonathan M. Spring and published by Syngress. This section from chapter 12 explains the importance of intrusion prevention and detection, as well as its pitfalls.

WHY INTRUSION DETECTION

An [intrusion detection and prevention system] IDPS is one of the more important devices in an organization's overall security strategy. There is too much data for any human analyst to inspect all of it for evidence of intrusions, and the IDPS helps alert humans to events to investigate and prioritize human recognition efforts. An IDPS also serves an important auditing function. If the machines that form the technological backbone of the frustration and resistance strategies are misconfigured, the IDPS should be positioned to detect violations due to these errors. Furthermore, some attacks will exist for a period of time before there is any available patch or mitigation.

An IDPS may be able to detect traffic indicative of the new attack, either as soon as a signature is made available or if the attack traffic is generally anomalous. An IDPS has many actions available when responding to a security event. Generating an alert for human eyes is a common action, but it can also log the activity, record the raw network data that caused the alert, attempt to terminate a session, alter network or system access controls, or some combination thereof [5]. If managed well, the different rules stratify the actions into different categories related to the severity of attack, reliability of rule, criticality of target, timeliness of response required, and other organizational concerns. Once the notifications are stratified, the human operator can prioritize response and recovery actions, which are the topic of Chapter 15.
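As a rough illustration of stratifying alerts, the following Python sketch ranks alerts by a combined score. The `Alert` fields, the 1 to 5 scales, and the multiplicative scoring formula are all assumptions made for this example; they are not taken from the book or from any particular IDPS product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    """Hypothetical alert record; field names and scales are illustrative only."""
    rule_id: str
    severity: int              # 1 (low) .. 5 (high): severity of the attack
    rule_reliability: float    # 0.0 .. 1.0: how often this rule is right
    target_criticality: int    # 1 (low) .. 5 (high): importance of the target


def priority(alert: Alert) -> float:
    """Combine the organizational concerns into one sortable score (assumed formula)."""
    return alert.severity * alert.target_criticality * alert.rule_reliability


def triage(alerts):
    """Return alerts ordered from most to least urgent."""
    return sorted(alerts, key=priority, reverse=True)


if __name__ == "__main__":
    queue = [
        Alert("noisy-rule", severity=5, rule_reliability=0.2, target_criticality=1),
        Alert("db-exploit", severity=5, rule_reliability=0.9, target_criticality=5),
        Alert("web-probe", severity=2, rule_reliability=0.8, target_criticality=4),
    ]
    for alert in triage(queue):
        print(alert.rule_id, round(priority(alert), 2))
```

A real deployment would likely also weigh the timeliness of the required response, which this sketch leaves out for brevity.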

Returning to our walled-city metaphor from Chapter 5, we already have our static defenses -- moats, walls, and gates -- as well as the more active defenses, such as guards who inspect people coming into the city. An IDPS is similar to a sentry posted above the city gate and/or in a tower nearby. If the guard gets overrun by a suddenly unruly mob, the guard cannot call for help. The sentry provides a basic defense-in-depth function of recognizing that a problem has occurred and an alert needs to be issued. Also like an IDPS, the sentry may have some immediate corrective actions available, such as telling the gate operator to close it temporarily; in some castles there are grates over the entryway from which a sentry could pour boiling oil to deter invaders who breached the outer defenses. But like an IDPS, while these responses may be effective stop-gap measures, they are not sustainable methods of network management. The most important functions are to alert the authorities so that a recovery of security can begin, and to keep a record of how the incident occurred so a better system can be put into place going forward.

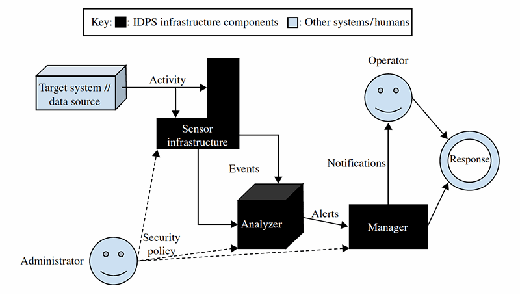

The three major components of an IDPS and how they interact with the relevant organizational components are summarized in Figure 12.1. The IDPS sensor infrastructure observes activity that it normalizes or processes into events, which the analyzer ingests and inspects for events of interest. The manager process deals with the events appropriately. In the tower sentry metaphor, these components are all within the one human sentry. In an IDPS, they can be part of one computer or distributed to specialized machines.
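The sensor, analyzer, and manager roles can be sketched as three small Python functions chained together. This is only a toy model: the comma-separated input format, the substring check, and the alert string are assumptions invented for the example, not how any real IDPS represents events.

```python
def sensor(raw_lines):
    """Normalize raw observations ("src, dst, payload") into event dictionaries."""
    for line in raw_lines:
        src, dst, payload = line.split(",", 2)
        yield {"src": src.strip(), "dst": dst.strip(), "payload": payload.strip()}


def analyzer(events, suspicious_terms):
    """Pass along only the events of interest (here: a naive substring check)."""
    for event in events:
        if any(term in event["payload"] for term in suspicious_terms):
            yield event


def manager(events_of_interest):
    """Decide what to do with each event of interest (here: format an alert)."""
    return [f"ALERT {e['src']} -> {e['dst']}: {e['payload']}" for e in events_of_interest]


if __name__ == "__main__":
    raw = [
        "10.0.0.5, 10.0.0.9, GET /index.html",
        "10.0.0.7, 10.0.0.9, GET /etc/passwd",
    ]
    for line in manager(analyzer(sensor(raw), ["/etc/passwd"])):
        print(line)
```

Because each stage only consumes the output of the previous one, the three functions could run in one process or be split across machines, mirroring the point that the components can share a computer or be distributed.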

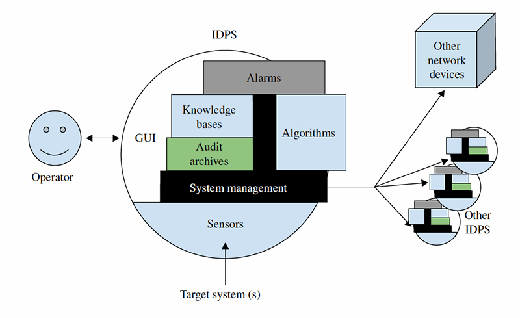

Figure 12.2 displays the internal components of an IDPS in more detail. The operator interacts with the system components through the graphical user interface (GUI); some systems use a command-line interface for administration in addition to or instead of a GUI. The alarms represent what responses to make. The knowledge base is the repository of rules and profiles for matching against traffic. Algorithms are used to reconstruct sessions and understand session and application data. The audit archives store past events of interest. System management is the glue that holds it all together, and the sensors are the basis for the system, receiving the data from the target systems.

NETWORK INTRUSION DETECTION PITFALLS

A network-based IDPS (NIDPS) has many strengths, but these strengths are also frequently its weaknesses. A NIDPS strength is that the system reassembles content and analyzes the data against the security policy in the format the target would process it. Another strength is that the data is processed passively, out of band of regular network traffic. A related strength is that a NIDPS can be centrally located on the network at a choke point to reduce hardware costs and configuration management. However, all of these benefits also introduce pitfalls, which will be discussed serially. Furthermore, there are some difficulties that any IDPS suffers from simply due to the fact that the Internet is noisy, and so differentiating security-related weird stuff from general anomalies becomes exceptionally difficult. For some example benign anomalies, see Bellovin [6].

The following sections are not intended to devalue IDSs or to give the impression that an IDS is not part of a good security strategy. An IDS is essential to a complete recognition strategy. The following pitfalls are remediated and addressed to varying degrees in available IDPSs. Knowledge of how well a potential IDPS handles each issue is important when selecting a system for use. Despite advances in IDPS technology, the following pitfalls do still arise occasionally. It is important for defenders to keep this in mind: no one security strategy is infallible. Knowing the ways in which each is more likely to fail helps design overlapping security strategies that account for weaknesses in certain systems. For these reasons, we present the following common pitfalls in IDPSs.

Fragmentation and IP Validation

One of the pitfalls of reassembling sessions as the endpoints would view them is that endpoints tend to reassemble sessions differently. This is not only true of applications. This is true of the fundamental fabric of the Internet, the TCP/IP (Transmission Control Protocol/Internet Protocol) protocol suite. To handle all possible problems that a packet might have while traversing the network, IP packets might be fragmented. Furthermore, if packets are delayed they might be resent by the sender. This leads to a combination of situations in which the receiver may receive multiple copies of all or part of an IP packet. The RFCs (Requests for Comments) that standardize TCP/IP behavior are silent on how the receiver should handle this possibly inconsistent data, and so implementations vary [7, p. 280].
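To make the ambiguity concrete, here is a minimal Python sketch of two reassembly policies applied to the same overlapping fragments. The policy names `first` and `last` and the byte-by-byte model are simplifying assumptions for illustration; real operating systems apply more nuanced rules, and the sketch assumes the fragments leave no gaps.

```python
def reassemble(fragments, policy):
    """Rebuild a payload from (offset, data) fragments given in arrival order.

    policy="first": when fragments overlap, keep the byte that arrived first.
    policy="last":  when fragments overlap, keep the byte that arrived last.
    """
    if policy not in ("first", "last"):
        raise ValueError("policy must be 'first' or 'last'")
    buffer = {}
    for offset, data in fragments:
        for i, byte in enumerate(data):
            position = offset + i
            if policy == "last" or position not in buffer:
                buffer[position] = byte
    return bytes(buffer[position] for position in sorted(buffer))


if __name__ == "__main__":
    # The second fragment overlaps bytes 2-3 of the first.
    fragments = [(0, b"AAAA"), (2, b"BBBB")]
    print(reassemble(fragments, "first"))
    print(reassemble(fragments, "last"))
```

If the IDPS applies one policy and the protected host applies the other, the two see different byte streams from identical packets, which is exactly the gap that insertion and evasion attacks exploit.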

Packet fragmentation for evading IDS systems was laid out in detail in a 1997 U.S. military report that was publicly released the following year [8]. Evasion is one of three general attacks described; the other two attacks against IDSs are insertion and denial of service (DoS). Insertion and evasion are both caused, in general, by inconsistencies in the way the TCP/IP stack is interpreted. DoS attacks against IDPSs are not limited to TCP/IP interpretation, and are treated throughout the subsections that follow. DoS attacks are possible through bugs and vulnerabilities, such as a TCP/IP parsing vulnerability like the teardrop attack [9], but when this chapter discusses DoS on IDPSs it refers to DoS specific to IDPSs. DoS attacks such as the teardrop attack are operating system vulnerabilities, and so such things are not IDPS specific, even though many IDPSs may run on operating systems that are affected.

The general problem sketched out by the packet fragmentation issue is that the strength of the IDPS -- namely, that it analyzes the data against the security policy in the format the target would process -- is thwarted when the attacker can force the IDPS to process a different packet stream than the target will. This can be due to insertion or evasion. For example, if the IDPS does not validate the IP header checksum, the attacker can send blatant attack packets that will initiate false IDPS alerts, because the target system would drop the packet and not actually be compromised. This insertion attack can be more subtle. IP packets have a time-to-live (TTL) value that each router decrements by one before forwarding. Routers will drop an IP packet when the TTL of the packet reaches 0. An attacker could send the human responder on lots of confusing, errant clean-up tasks if the TTLs of packets are crafted to reach the IDPS, but be dropped before they reach the hosts [8]. And if an attacker knows your network well enough to manipulate TTLs like this, it is technically hard to prevent. The IDPS would have to know the number of router hops to each target host it is protecting -- a management nightmare.
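Both checks described above can be sketched in a few lines of Python. The checksum routine follows the standard IPv4 one's-complement header checksum; the function names, and the idea that the sensor is told how many routers sit between it and each host, are assumptions made for this example.

```python
import struct


def ipv4_header_checksum_ok(header: bytes) -> bool:
    """Return True if the IPv4 header's one's-complement checksum verifies."""
    if len(header) < 20 or len(header) % 2:
        return False
    # Sum the header as big-endian 16-bit words, checksum field included.
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    # Fold any carries back into the low 16 bits.
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    # A valid header sums to all ones.
    return total == 0xFFFF


def reaches_host(ttl_at_sensor: int, routers_to_host: int) -> bool:
    """Would a packet seen at the sensor survive the remaining router hops?

    Each router decrements the TTL by one and drops the packet at zero, so the
    TTL observed at the sensor must exceed the number of routers still ahead.
    """
    return ttl_at_sensor > routers_to_host


if __name__ == "__main__":
    good = bytes.fromhex("450000730000400040 11b861c0a80001c0a800c7".replace(" ", ""))
    print(ipv4_header_checksum_ok(good))
    print(reaches_host(ttl_at_sensor=2, routers_to_host=3))
```

The hard part in practice is not the comparison but keeping the `routers_to_host` value accurate for every protected host, which is the management burden the text describes.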

Another result of Ptacek and Newsham's report [8] was some research into how different operating systems handle different fragmentation possibilities [10]. Some NIDPS implementations now use these categories when they process sessions, and also include methods for the NIDPS to fingerprint which method the hosts it is protecting use so the NIDPS can use the appropriate defragmentation method [11]. This method improves processing accuracy, but management of this mapping is not trivial. Further, network address translation (NAT) and Dynamic Host Configuration Protocol (DHCP) will cause inconsistencies if the pool of computers sharing the IP space does not share the same processing method. This subtle dependency highlights the importance of a holistic understanding of the network architecture -- and keeping the architecture simple enough that it can be holistically understood.

Application Reassembly

NIDPSs perform reassembly of application data to keep states of transactions and appropriately process certain application-specific details. The precise applications reassembled by an IDPS implementation vary. Common applications like File Transfer Protocol (FTP), Secure Shell (SSH), and Hypertext Transfer Protocol (HTTP) are likely to be understood. Down the spectrum of slightly more specific applications, Gartner has published a business definition for "next-generation" IPSs that requires the system to understand the content of files such as portable document format (PDF) and Microsoft Office [12]. The ability to process this large variety of applications when making decisions is a significant strength of IDPS devices, as most other centralized network defense devices are inline and cannot spend the time to reassemble application data. Proxies can, but they are usually application specific, and so lack the broader context that IDPSs commonly can leverage.

The large and myriad application-parsing libraries required for this task introduce a lot of dependencies into IDPS operations, which can lead to some common pitfalls. First, IDPSs require frequent updates as applications change and bugs are fixed. If the IDPS was just purchased to fill a regulatory requirement and is ignored afterward, it quickly becomes less and less effective as parsers fall out of date.

Even in the best case where the system is up to date, many of the variable processing decisions that were described earlier related to IP fragmentation are relevant to each application the IDPS needs to parse. The various web browsers and operating systems may parse HTTP differently, for example. This is less of a problem in application handling, because as long as the IP packets are reassembled correctly, at least the IDPS has the correct data to inspect. But due diligence in testing rules might indicate that different rules are needed not just per application, but one for each common implementation of that application protocol. Bugs might be targeted in specific versions of application implementations, further ballooning the number of required rules. So far, NIDPSs themselves seem to be able to handle the large number of rules required, although rule management and tuning are arduous for system and security administrators.

Out-of-Band Issues

Although an IDPS is located on a central part of the network, it may not be in the direct line of network traffic. An inline configuration is recommended only when IPS functionality will be utilized; otherwise an out-of-band configuration is recommended [1]. When an IDS is running out of band it has some benefits, but it also introduces some possible errors. If the IDS is out of band, then no network services will suffer if the IDS is dropping packets. This is a benefit, except that the security team then needs to configure the IDS to alert them when it is dropping packets so they can take that into account. A more difficult problem to detect is if the network configuration that delivers packets to the IDS develops errors, either accidental or forced by the attacker, that result in the IDS not receiving all the traffic in the first place. There is a similar problem with other resource exhaustion issues, whether due to attacks or simply to a large network load, at the transport and application layer.

Inline architectures have to make harder decisions about what to do when the IPS resources are exhausted. Despite the best planning, resource exhaustion will happen occasionally; if nothing else, adversaries attempt to cause it with DoS attacks. Whether the IPS chooses to make network performance suffer and drop packets, or it chooses to make its analysis suffer and not inspect every packet, is an important decision. The administrator should make the risk analysis for this decision clear. This is an example of a failure control situation [2]. In general, a fail-secure approach is recommended; in this case the IPS would fail-secure by dropping packets. This approach fails securely because no attack can penetrate the network as a result of the failure, unlike the other option.
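The two choices can be sketched as a single configuration flag on a toy inline device. The `InlineIps` class, its bounded inspection queue, and the returned status strings are hypothetical names invented for this illustration; they do not correspond to any real product's interface.

```python
from collections import deque


class InlineIps:
    """Toy inline IPS with a bounded inspection queue (illustrative only)."""

    def __init__(self, capacity: int, fail_closed: bool = True):
        self.capacity = capacity
        self.fail_closed = fail_closed
        self.queue = deque()
        self.uninspected = 0  # packets that bypassed inspection

    def submit(self, packet: bytes) -> str:
        """Accept a packet for inspection, or apply the failure policy when full."""
        if len(self.queue) < self.capacity:
            self.queue.append(packet)
            return "queued"
        if self.fail_closed:
            # Fail-secure: network performance suffers, but nothing gets past unseen.
            return "dropped"
        # Fail-open: traffic keeps flowing, but analysis suffers.
        self.uninspected += 1
        return "forwarded_uninspected"


if __name__ == "__main__":
    secure = InlineIps(capacity=1, fail_closed=True)
    print(secure.submit(b"first"), secure.submit(b"second"))
```

Counting the uninspected packets in the fail-open case is a small design choice worth keeping: it gives the operator a record of how much traffic the device never analyzed.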

In either case, the resource exhaustion failure still causes damage. The IDPS cannot log packets it never reads, and if its disk space or processor is exhausted, then it cannot continue to perform its recognition functions properly. Therefore, appropriately resourcing the IDPS is important. On large networks, this will likely require specialized devices.

Centrality Problems

Since the NIDPS is centrally located, it has a convenient view of a large number of hosts. However, this central location combined with the passive strategy of IDPS also means that data can be hidden from view. Primarily this is due to encryption, whether it is IPSec [13,14], Transport Layer Security (TLS) [15], or application-level encryption like Pretty Good Privacy (PGP) [16]. Encryption is an encouraged, and truthfully necessary, resistance strategy (Chapter 8). However, if application data is encrypted, then the IDPS cannot inspect it for attacks. This leads to a fundamental tension -- attackers will also encrypt their attacks with valid, open encryption protocols to avoid detection on the network. One strategy to continue to detect these attacks is host-based detection. The host will decrypt the data, and can perform the IDPS function there. However, this defeats the centralized nature of NIDPS, and also thwarts the broad correlation abilities that only a centralized sensor can provide. And as groups like the Electronic Frontier Foundation encourage citizens with programs like "HTTPS Everywhere" [17], in addition to the push from the security community, the prevalence of encryption will only increase.

On a controlled network it is possible to proxy all outgoing connections, and thereby decrypt everything, send it to the IDPS, and then encrypt it again before it is sent along to its destination. It is recommended to implement each of these functions (encryption proxy, IDPS) on a separate machine, as each is resource-intensive and has different optimization requirements [18].

Base-rate Fallacy

The final problem that IDPSs encounter is that they are trying to detect inherently rare events. False positives -- that is, the IDPS alerts on benign traffic -- are impossible to avoid. If there are too many false positives, the analyst is not able to find the real intrusions in the alert traffic. All the alerts are equally alerts; there is no way for the analyst to know without further investigation which are false positives and which are true positives. Successful intrusions are rare compared to the scope of how much network traffic passes a sensor. Intrusions may happen every day, but if the intrusions become common it does not take an IDPS to notice. Network performance just plummets as SQL Slammer, for example, repurposes your network to scan and send spam. (Structured Query Language [SQL] is a common database language. SQL Slammer is so named because it exploits a vulnerability in the database and then reproduces automatically through scanning for other databases to exploit.) But that is not the sort of intrusion we need an IDPS to find. And hopefully all of the database administrators and firewall rule sets have learned enough from the early 2000s that the era of worms flooding whole networks has passed [19]. It also seems likely criminals realized there was no money in that kind of attack, but that stealing money can be successful with stealthier attacks [20]. Defenders need the IDPS to recognize stealthy attacks.

Unfortunately for security professionals, statistics teaches us that it is especially difficult to detect rare events. Bayes' theorem is necessary to demonstrate this difficulty, but let's consider the example of a medical test. What we are interested in finding is the false-positive rate -- that is, the chance that the medical test alerts the doctor the patient has the condition when the patient in fact does not. We need to know two facts to calculate the false-positive rate: the accuracy of the test and the incidence of the disease in the population. Let's say the test is 91% accurate, and 0.75% of the population actually has the condition. We can calculate the chance that a positive test result is actually a false positive as follows. Here Pr is the probability of the event in brackets ([ ]), and the vertical bar ( | ) between two events can be read as "given," meaning that the probability of one event is dependent on, or given, another. For example, the probability that the patient does not have the condition given the test result was positive could be written Pr[healthy|positive]. This is the probability the test result is an incorrect alarm. Therefore:

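$$
\Pr[\text{healthy} \mid \text{positive}] = \frac{\Pr[\text{positive} \mid \text{healthy}] \times \Pr[\text{healthy}]}{\Pr[\text{positive} \mid \text{sick}] \times \Pr[\text{sick}] + \Pr[\text{positive} \mid \text{healthy}] \times \Pr[\text{healthy}]}
$$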

There is a subtle difference here. We are not calculating the false-positive rate. That is simply Pr[positive|healthy]. We are calculating the chance that the patient is healthy given the test alerted the doctor to the presence of the condition. This value is arguably much more important than the false-positive rate. The IDPS human operator wants to know if action needs to be taken to recover security when the IDPS alerts that it has recognized an intrusion. That value is Pr[healthy|positive], what we're trying to get to. Let's call this value the alert error, or AE. Let's simplify the preceding equation by calling the false-positive rate FPR, and the true-positive rate TPR. The probabilities remaining in the equation will be the rate of the condition in the population, represented by the simple probability that a person is sick or healthy:

$$
\text{AE} = \frac{\text{FPR} \times \Pr[\text{healthy}]}{\text{TPR} \times \Pr[\text{sick}] + \text{FPR} \times \Pr[\text{healthy}]}
$$

Let's substitute in the values and calculate the AE in our example. The test is 91% accurate, so the FPR is 9% or 0.09, and the TPR is 0.91. If 0.75% of people have the condition, then the probability a person is healthy is 0.9925, and sick is 0.0075. Therefore:

$$
\begin{aligned}
\text{AE} &= \frac{0.09 \times 0.9925}{0.91 \times 0.0075 + 0.09 \times 0.9925} \\
&= \frac{0.089325}{0.006825 + 0.089325} \\
&\approx 92.9\%
\end{aligned}
$$

Therefore, with these conditions, 92.9% of the time when the test says the patient has the condition, the patient will in fact be perfectly healthy. If this result seems surprising -- that with a 9% false-positive rate almost 93% of the alerts would be false positives -- you are not alone. It is a studied human cognitive error to underestimate the importance of the basic incidence of the tested-for condition when people make intuitive probability evaluations [21, 22]. One can bring intuition in line with reality by keeping in mind that if there are not very many sick people, it will be difficult to find them, especially if the test for something is relatively complicated (like sickness or computer security intrusions). It is the proverbial needle-in-a-haystack problem.
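The arithmetic of the medical-test example can be checked with a short Python function. The function name `alert_error` and its parameter names are chosen for this sketch; the formula is the AE expression from the text, with Pr[sick] supplied as the base rate.

```python
def alert_error(fpr: float, tpr: float, base_rate: float) -> float:
    """Probability that a positive result is a false alarm (the AE in the text).

    fpr:       false-positive rate, Pr[positive | healthy]
    tpr:       true-positive rate,  Pr[positive | sick]
    base_rate: incidence of the condition, Pr[sick]
    """
    healthy = 1.0 - base_rate
    false_alerts = fpr * healthy
    true_alerts = tpr * base_rate
    return false_alerts / (true_alerts + false_alerts)


if __name__ == "__main__":
    # Values from the example: 91% accurate test, 0.75% incidence.
    print(f"{alert_error(fpr=0.09, tpr=0.91, base_rate=0.0075):.1%}")
    # Lowering the false-positive rate shrinks the alert error at the same incidence.
    print(f"{alert_error(fpr=0.001, tpr=0.91, base_rate=0.0075):.1%}")
```

Trying a few values of `base_rate` makes the intuition tangible: the rarer the condition, the lower the false-positive rate has to be before most alerts are real.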

There has been some research into the technical aspects of the effects of the base-rate problem on IDPS alert rates [23]. The results are not very encouraging -- the estimate is that the false-positive rate needs to be at or below 0.00001, or 10⁻⁵, before the alarm rate is considered "reasonable," at 66% true alarms. However, in the context of other industrial control systems, the studies of operator psychology indicate that in fields such as power plant, paper mill, steel mill, and large ship operations, the operator would disregard the whole alarm system as useless if the true alarm rate were only 66%.

The base-rate fallacy provides two lessons when considering an IDPS. First, when an IDPS advertises its false-positive rate as "reasonable," keep in mind that what is reasonable for a useful IDPS is much lower than is intuitively expected. Second, the base-rate problem has a lot to do with why signature-based operation is the predominant IDPS operational mode. It has much lower false positives, and so even though signatures may miss many more events, they can achieve sufficiently low false-positive rates to be useful. Given how noisy the Internet is, anomaly-based detection is still largely a research project, despite the alluring business case of a system that just knows when something looks wrong. The following two sections describe these two modes of operation.

An additional important point is that a grasp of statistics and probability is important for a network security analyst. For a treatment of the base-rate fallacy in this context, see Stallings [24, ch. 9A]. For a good introductory statistics text that is freely available electronically, see Kadane [25].

MODES OF INTRUSION DETECTION

In broad strokes, NIDPSs can operate with signature-based or anomaly-based detection methods. Practically, both modes are used because they have important benefits and uses. However, it is useful to understand the different benefits, and limitations, of each mode of detection, even though a single IDPS that is purchased will likely possess both signature-based and anomaly-based detection capabilities to some extent. For example, Snort (an open-source IDS, see the preceding sidebar) has been extended to perform some anomaly detection capabilities in various ways [28]. This categorization is similar to the reason to understand NIDPSs as a separate kind of device from the various types of firewalls as described in Chapter 5, even though devices on the market often do not strictly adhere to any category. Categorizing is helpful for the network defender to conceptualize and plan a coordinated defense strategy.

Network Intrusion Detection: Signatures

Signatures are one of two modes that IDPSs use to detect intrusions. The idea is that the NIDPS detects a known pattern, or signature, of a specific malicious activity that is exhibited within the traffic. Signature-based detection is simpler to implement than anomaly-based detection, and a good signature will have a lower, more consistent false-positive rate. However, signatures can be easy to evade and require the attack to be known before they can be written and the attack detected. Rules can usually be written fairly quickly once an attack is known, but someone, somewhere, must first detect it without a signature.

Signatures are quite flexible. A NIDPS aims to inspect the content of all the traffic that traverses the network. This is opposed to firewalls, which tend to inspect only the headers of the packets. This increased scope is arduous, and partly explains why NIDPSs can have performance issues. It is also the reason that they are such a useful tool.

![FIGURE 12.3 - An example snort rule. The different features and fields of the rule are labeled. Source: Roesch et al. [30].](https://cdn.ttgtmedia.com/rms/onlineImages/intro_information_sec_ch12_page13_graphic1_mobile.png)

To understand what a signature can accomplish, it may be helpful to understand the anatomy of a rule. The de facto standard format for a signature is its expression in a Snort rule. Snort is an open-source NIDPS that began development in 1998, and is primarily signature based. Due to its popularity, many other NIDPSs accept or use Snort-format rules. A rule is a single expression of both a signature and what to do when it is detected. Figure 12.3 displays a sample text Snort rule and annotates the components. (Compiled Snort rules exist, and these are in binary format, unreadable by humans. They have a different format than this text rule, which will not be discussed here, but the function is essentially the same.) For a complete rule-writing guide, see the Snort documentation [29].

Figure 12.3 is not as complex as it may appear at first glance. There are three sections in this rule: one for what kind of rule this is and packets to look at, one for what to look for in the packets, and a third for what to say once the rule finds a match. These sections are enclosed in light-gray dashed lines. The first section is the rule header, which is the more structured of the three. This section declares the rule type. Figure 12.3 is an "alert," one of the basic built-in types.

Rule types define what to do when the rule matches, which we'll return to later. The rest of the rule header will match against parts of the TCP/IP headers for each packet. This essentially performs a packet filter, like a firewall access control list (ACL; see Chapter 5), even though the syntax and capabilities are different than a firewall. IP addresses can be matched flexibly, either with variables or ranges. Although not demonstrated in this rule, ports can also be specified with ranges or variables. IP addresses can also be specified as "any," for any value matches. The arrow indicates direction, and <> (not pictured) means either direction matches. Although the first versions used to allow it to be either → or ←, that soon became confusing. Now only → is allowed, so the source IP is always on the left and the destination is always on the right.

The contents of the rule all go within parentheses, with different parts separated by semicolons. This is where the flexibility of an IDPS rule can really be leveraged. Figure 12.3 demonstrates two of the simpler sections -- payload detection and general -- each with only their quintessential option demonstrated. Payload detection dictates what to look for in packet payloads, and it also offers some options to describe how and where to look for the content, which are not demonstrated in the figure. The content field is the quintessential option, as it defines the string that the signature will look for. In the example, there is a particular sequence of bits that can be used to inject malicious data into a particular email process in a known way, and this rule looks for that sequence of bits. Since this sequence is in the application data, it is clear why such signatures can be easily evaded with encryption -- encrypting the payload would alter these bits while in transit. The general section includes descriptors about the rule whenever an alert is sent or packets logged, so that the analyst does not have to remember that the hex sequence E8C0FFFFFF is particular to a buffer overflow in the Internet Message Access Protocol (IMAP), for email.
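The header-then-payload matching described above can be imitated with a toy matcher in Python. This is not Snort and does not parse Snort syntax; the `Rule` fields, the destination network 192.168.1.0/24, and the message text are hypothetical values chosen for the sketch. The content bytes are the five-byte hex sequence from the example, read here as E8 C0 FF FF FF, and port 143 is the standard IMAP port.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Simplified stand-in for a signature rule (illustrative fields only)."""
    action: str      # rule type, e.g. "alert"
    protocol: str    # e.g. "tcp"
    dst_net: str     # CIDR network, or "any"
    dst_port: int
    content: bytes   # byte sequence to look for in the payload
    msg: str         # what to tell the analyst on a match


def matches(rule: Rule, protocol: str, dst_ip: str, dst_port: int, payload: bytes) -> bool:
    """Check the header fields first (like a packet filter), then the payload."""
    if protocol != rule.protocol or dst_port != rule.dst_port:
        return False
    if rule.dst_net != "any":
        if ipaddress.ip_address(dst_ip) not in ipaddress.ip_network(rule.dst_net):
            return False
    return rule.content in payload


if __name__ == "__main__":
    rule = Rule("alert", "tcp", "192.168.1.0/24", 143,
                bytes.fromhex("E8C0FFFFFF"), "IMAP buffer overflow attempt")
    payload = b"\x90\x90" + bytes.fromhex("E8C0FFFFFF") + b"/bin/sh"
    if matches(rule, "tcp", "192.168.1.20", 143, payload):
        print(f"{rule.action}: {rule.msg}")
```

Feeding the same function an encrypted copy of the payload shows the evasion problem directly: the header still matches, but the content bytes no longer appear.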

As the amount of available software has grown, so has the number of vulnerabilities. At the same time, older vulnerabilities do not go away -- at least not quickly. If a vulnerability only afflicts a Windows 98 machine, most IDPSs in 2013 do not still need to track or block it. The basic premise is that if a vulnerability does not exist on the network the IDPS is protecting, it does not need signatures to detect intrusions resulting from it. But this in practice provides little respite, and the number of signatures that an IDPS needs to track grows rapidly.

There is a second way to view this threat landscape, and that is to ask what vulnerabilities attackers are commonly exploiting, and preferentially defend against them. There is always the chance that a targeted attack will use an unknown, zero-day vulnerability to nevertheless penetrate the system undetected, but that is a different class of attacker. There is a set of common, lucrative criminal malicious software that exploits a small set of known vulnerabilities, and these are the attacks that are all but guaranteed to hit an unprepared network. There are only about 10 vulnerabilities of this mass-exploit quality per year, against only a handful of applications [31]. The best way to defend against such common exploits may not be an IDPS; however, an IDPS should be a device that helps the defenders determine what exploits are most common on their network, and thus which exploits deserve special attention to prevention, such as the steps described in Guido [31].

Network Intrusion Detection: Anomaly Based

Anomaly-based detection generally needs to work on a statistically significant number of packets, because any packet is only an anomaly compared to some baseline. This need for a baseline presents several difficulties. For one, anomaly-based detection will not be able to detect attacks that can be executed with a few or even a single packet. These attacks, such as the ping of death, do still exist [32], and are much better suited for signature-based detection. Further difficulties arise because the network traffic ultimately depends on human behavior. While somewhat predictable, human behavior tends to be unpredictable enough to cause NIDPS anomaly detection trouble [33].

While signature-based detection compares behavior to rules, anomaly-based detection compares behavior to profiles [1]. These profiles still need to define what is normal, just as rules need to be defined. However, anomaly-based profiles are more like white lists, because the profile detects when behavior goes outside an acceptable range. Unfortunately, on most networks the expected set of activity is quite broad. Successful anomaly detection tends to be profiles such as "detect if ICMP (Internet Control Message Protocol) traffic becomes greater than 3% of network traffic" when it is usually only 1%. Application-specific data is less commonly used. This approach can detect previously unknown threats; however, it can also be defeated by a careful attacker who attempts to blend in. Attackers may not be careful enough to blend in, but the especially careful adversaries are all the more important to catch. In general, adversaries with sufficient patience can always blend in to the network's behavior. Therefore, anomaly detection serves an important purpose, but it is not a panacea, especially not for detecting advanced attackers.
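The ICMP profile quoted above can be sketched as a simple threshold check in Python. Measuring the share by packet count rather than by bytes, and the function name `icmp_anomaly`, are assumptions for this example; the text does not say how the share of traffic is measured.

```python
from collections import Counter


def icmp_anomaly(protocols, threshold: float = 0.03) -> bool:
    """Flag a traffic window whose ICMP share of packets exceeds the threshold.

    protocols: iterable of protocol names, one per observed packet.
    threshold: maximum acceptable ICMP fraction (3% in the quoted profile).
    """
    counts = Counter(protocols)
    total = sum(counts.values())
    if total == 0:
        return False  # an empty window gives no evidence either way
    return counts["icmp"] / total > threshold


if __name__ == "__main__":
    normal_window = ["tcp"] * 99 + ["icmp"] * 1   # about 1% ICMP
    odd_window = ["tcp"] * 95 + ["icmp"] * 5      # about 5% ICMP
    print(icmp_anomaly(normal_window), icmp_anomaly(odd_window))
```

The sketch also shows why a patient attacker can blend in: any activity that keeps the ICMP share under the threshold, however malicious, never trips the profile.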

About the writer:

Dr. Timothy Shimeall is an Adjunct Professor of the Heinz College of Carnegie Mellon University, with teaching and research interests focused in the area of information survivability. He is an active instructor in information security management and information warfare, and has led a variety of survivability-related independent studies. Tim is also a senior member of the technical staff with the CERT Network Situational Awareness Group of Carnegie Mellon's Software Engineering Institute, where he is responsible for overseeing and participating in the development of analysis methods in the area of network systems security and survivability. This work includes development of methods to identify trends in security incidents and in the development of software used by computer and network intruders. Of particular interest are incidents affecting defended systems and malicious software that are effective despite common defenses. Prior to his time at Carnegie Mellon, Tim was an Associate Professor at the Naval Postgraduate School in Monterey, CA.

Jonathan Spring is a member of the technical staff with the CERT Network Situational Awareness Group of the Software Engineering Institute, Carnegie Mellon University. He began working at CERT in 2009. He also serves as an adjunct professor at the University of Pittsburgh's School of Information Sciences. His current research topics include monitoring cloud computing and DNS traffic analysis. He holds a Master's degree in information security and a Bachelor's degree in philosophy from the University of Pittsburgh.

This was last published in September 2014

Source: https://searchsecurity.techtarget.com/feature/Introduction-to-Information-Security-A-Strategic-Based-Approach

Posted by: schnabelnumpat.blogspot.com
